By 2025, the Zero Trust framework has evolved from a theoretical concept into a cornerstone of cybersecurity, reshaping how organizations secure their digital assets. Adopting a robust Zero Trust strategy is no longer a compliance checkbox; it is an operational imperative. According to a recent Zscaler report, more than 80% of organizations plan to fully implement Zero Trust by 2026, underscoring its importance in achieving resilient cyber defense, secure third-party collaboration, and uninterrupted business continuity.

At the heart of the Zero Trust model lies a daunting challenge: continuous, real-time evaluation of access requests based on shifting factors such as device security posture, user behavior, geographic location, and workload sensitivity. This evaluation generates far more data than human teams can manage manually. This is where artificial intelligence (AI) steps in, becoming not just beneficial but essential for scaling and automating adaptive trust assessments and risk management.

AI plays a pivotal role across all five Zero Trust pillars identified by CISA: identity, devices, networks, applications and workloads, and data. It does so primarily by filtering signal from noise, rapidly identifying potential threats, detecting sophisticated malware patterns, and applying behavioral analytics to recognize anomalies that would otherwise go unnoticed. Imagine a scenario where a user suddenly downloads sensitive documents at an unusual hour from an unexpected location. An AI-driven behavioral model can flag this deviation within seconds, evaluate its risk context, and trigger an appropriate automated response, such as immediate reauthentication or session termination, without waiting for a human to review the event.
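To make the scenario above concrete, here is a minimal sketch of how deviations from a user's baseline might be turned into a graduated response. Everything in it is an illustrative assumption: the `AccessEvent` and `Baseline` types, the integer point weights, and the two thresholds are hypothetical. A production system would learn the baseline and the weights from historical data rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    user: str
    hour: int            # local hour of day, 0-23
    country: str         # country code derived from the source IP
    sensitive_docs: int  # sensitive documents downloaded in this session


@dataclass(frozen=True)
class Baseline:
    usual_hours: range
    usual_countries: frozenset
    typical_sensitive_docs: int


# Hypothetical point weights and thresholds, chosen only for illustration.
POINTS = {"hour": 30, "location": 40, "volume": 30}
REAUTH_THRESHOLD = 30
TERMINATE_THRESHOLD = 70


def risk_score(event: AccessEvent, baseline: Baseline) -> int:
    """Add points for each way the event deviates from the user's baseline."""
    score = 0
    if event.hour not in baseline.usual_hours:
        score += POINTS["hour"]
    if event.country not in baseline.usual_countries:
        score += POINTS["location"]
    if event.sensitive_docs > 3 * baseline.typical_sensitive_docs:
        score += POINTS["volume"]
    return score


def decide(score: int) -> str:
    """Map a risk score to a graduated response."""
    if score >= TERMINATE_THRESHOLD:
        return "terminate_session"
    if score >= REAUTH_THRESHOLD:
        return "reauthenticate"
    return "allow"
```

With a baseline of office hours in a single country, a download at 3 a.m. from the usual country would score 30 and prompt reauthentication, while the same download in bulk from an unfamiliar country would score 100 and end the session.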

While AI broadly enhances Zero Trust, it’s critical to distinguish between its two primary categories—predictive and generative AI—and their distinct but complementary roles. Predictive AI, encompassing machine learning and deep learning models, leverages historical data to detect patterns and preemptively identify threats. Such AI tools bolster endpoint detection and response (EDR) systems, intrusion detection platforms, and behavioral analytics engines, enabling real-time risk assessment and proactive policy enforcement within Zero Trust frameworks.

Generative AI, represented by models such as ChatGPT and Gemini, takes on a different yet equally vital function. Rather than directly enforcing security controls, generative AI enhances human analyst capabilities by synthesizing complex information, streamlining investigative processes, generating targeted queries, and rapidly contextualizing data during high-pressure security incidents. These capabilities significantly reduce operational friction, empowering security teams to focus on strategic threat analysis and response planning.

Further pushing the boundaries of AI-driven security automation is agentic AI, which transforms passive large language models into active, API-integrated agents capable of executing complex tasks autonomously. Such systems can orchestrate end-to-end Zero Trust procedures: gathering identity context, dynamically adjusting network micro-segmentation rules, managing temporary access grants, and automatically revoking privileges once threats are mitigated. This shortens response times, makes enforcement more consistent, and frees security personnel from routine tasks so they can focus on critical threat hunting and strategic oversight.
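One piece of that workflow, temporary access that is revoked automatically, can be sketched in a few lines. This is a simplified in-memory illustration, not a real agent framework or identity provider API: the `TemporaryAccessManager` class and its method names are hypothetical, and a real deployment would call the organization's identity and network policy APIs instead of updating a dictionary.

```python
import time
from typing import Callable, Dict, Tuple


class TemporaryAccessManager:
    """Tracks time-limited grants; expired grants are revoked on the next check."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # injectable so the behavior can be tested
        self._grants: Dict[Tuple[str, str], float] = {}  # (user, resource) -> expiry

    def grant(self, user: str, resource: str, ttl_seconds: float) -> None:
        """Grant access that expires ttl_seconds from now."""
        self._grants[(user, resource)] = self._clock() + ttl_seconds

    def revoke(self, user: str, resource: str) -> None:
        """Revoke access immediately, for example once a threat is mitigated."""
        self._grants.pop((user, resource), None)

    def is_allowed(self, user: str, resource: str) -> bool:
        """Deny by default; allow only while an unexpired grant exists."""
        expiry = self._grants.get((user, resource))
        if expiry is None:
            return False
        if self._clock() >= expiry:
            self.revoke(user, resource)
            return False
        return True
```

The deny-by-default check reflects the Zero Trust stance: access exists only while an explicit, unexpired grant does, so a grant the agent forgets to clean up still lapses on its own.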

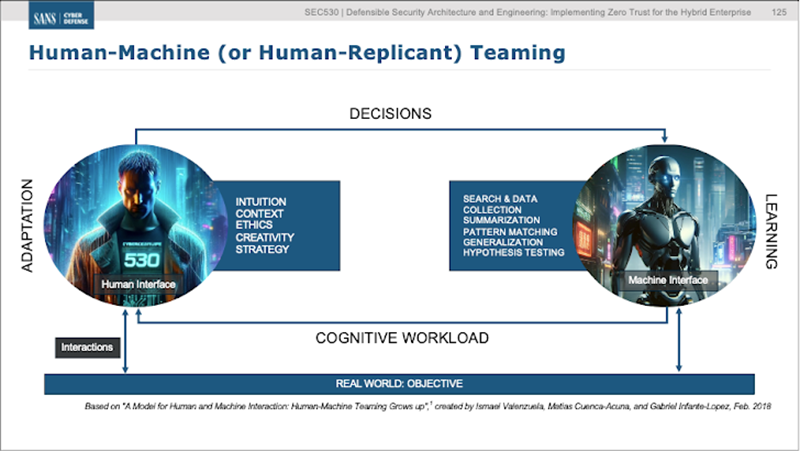

Despite AI’s undeniable strengths, its integration into Zero Trust architecture must embrace the principle of human-machine teaming. Predictive, generative, and agentic AI should function as specialized co-pilots—not replacements—for human analysts. AI-driven automation significantly augments human efforts by rapidly identifying threats and recommending responses, but it remains susceptible to manipulation, as highlighted by the SANS Critical AI Security Guidelines. Risks such as model poisoning, inference tampering, and data manipulation can undermine Zero Trust integrity if AI outputs are uncritically accepted.

To mitigate these risks, the SANS SEC530 Defensible Security Architecture & Engineering course emphasizes a balanced approach: AI processes vast volumes of data swiftly, but humans retain ultimate oversight, ensuring AI-driven decisions align with broader security goals. Human oversight ensures strategic policies are well-defined, system designs remain rigorous, and automated actions stay within safe operational boundaries.
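One way to keep automated actions within safe operational boundaries is an explicit approval gate in front of anything the AI proposes. The sketch below is an assumption about how such a gate could look, not something taken from the SANS material: the action names, the two sets, and the `min_confidence` default are all hypothetical.

```python
# Hypothetical action catalog: low-impact actions may run automatically,
# high-impact actions always wait for a human analyst.
AUTO_APPROVED = {"require_reauthentication", "log_alert"}
NEEDS_HUMAN = {"terminate_session", "revoke_privileges", "change_segmentation"}


def route_action(action: str, confidence: float, min_confidence: float = 0.9) -> str:
    """Decide whether an AI-proposed action runs, waits for review, or is rejected."""
    if action in AUTO_APPROVED and confidence >= min_confidence:
        return "execute"
    if action in AUTO_APPROVED or action in NEEDS_HUMAN:
        # Low model confidence or a high-impact action: a human decides.
        return "queue_for_analyst"
    # Anything outside the catalog is refused rather than guessed at.
    return "reject"
```

Rejecting unknown actions outright means a manipulated or poisoned model cannot invent a new operation and have it executed; it can only propose actions that humans have already placed in the catalog.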

Ultimately, the evolution of Zero Trust and AI isn’t about machines replacing human judgment—it’s about harnessing AI to amplify human capabilities. Machines excel in speed and data processing, but humans uniquely contribute essential context, ethical discernment, and nuanced judgment calls critical to resilient cybersecurity operations. As Zero Trust continues evolving, the most sustainable path forward relies on seamlessly integrating AI capabilities with human insight, ensuring comprehensive, robust, and agile security postures that keep pace with an increasingly complex digital landscape.